In the age of artificial intelligence, obtaining precise and useful information is key for business leaders and professionals across all areas. However, AI-generated answers often end up short or generic if the initial question is vague. The solution? Treat the interaction with AI as an iterative conversation in which the model can ask clarifying questions before answering. This simple shift in approach can substantially improve the quality of the information you get back [1][2]. In this article we explore why inviting AI to ask questions can transform results, and how to apply this approach effectively.

What Are Iterative Prompts and Why Do They Matter?

An iterative prompt is an instruction that fosters a back-and-forth dialogue with AI. Instead of posing a static question and accepting the first answer, we propose a cycle in which the model first asks clarifying questions if something is unclear, and only then produces its final answer. This turns a single-round interaction into a process more akin to consulting: the AI acts like an advisor who gathers details before offering recommendations [4].

Suggested prompt snippet (to use recurrently):

“Before generating the requested information, please ask any clarifying questions you have. Number them so I can easily indicate which one I’m answering.”
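If you send requests from a script rather than typing them by hand, the snippet can be appended automatically. The following is a minimal sketch, not a prescribed implementation: the names `CLARIFY_SNIPPET` and `with_clarification` are hypothetical, and the wording of the constant can be swapped for whichever version of the snippet you prefer.

```python
# Hypothetical helper: appends the clarification snippet to any request.
CLARIFY_SNIPPET = (
    "Before generating the requested information, please ask any clarifying "
    "questions you have. Number them so I can easily indicate which one "
    "I'm answering."
)


def with_clarification(request: str, snippet: str = CLARIFY_SNIPPET) -> str:
    """Return the request followed by the snippet as its own paragraph."""
    return f"{request.strip()}\n\n{snippet}"


prompt = with_clarification(
    "Prepare a marketing strategy for the launch of our new product."
)
print(prompt)
```

Keeping the snippet in one constant means every request gets the same instruction, which makes it easier to reuse recurrently.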

From Generic Answers to Precise Solutions: The Power of Iteration

Allowing AI to clarify its doubts leads to a notable change in output quality:

- Improved precision and specificity. Vague requests lead to vague answers; with clarifications, AI gathers the necessary details and provides more specific recommendations [1][2].

- Fewer faulty assumptions. Without iteration, the AI may fill gaps with incorrect assumptions. Asking questions up front surfaces those assumptions and reduces the risk of errors or omissions [2][4].

- Dynamic, adaptive interaction. Instead of a static exchange, the conversation becomes iterative: AI adjusts its path based on your feedback, which often produces outcomes far superior to a single turn [1][2].

- Detection of nuances. By asking first, the model identifies special cases, constraints, or nuances that weren’t initially evident [4].

- Greater confidence in the final answer. Instructions like “ask until you’re 95% sure” push the model to self-assess its understanding before answering [2].

Practical Example: From the Initial Request to the Ideal Answer

- Request without iteration: “Prepare a marketing strategy for the launch of our new product.”

The AI may produce a generic plan with standard recommendations that might not fit the product or audience.

- Request with iteration (using the prompt snippet):

The AI first asks: “1) Who is the primary target audience? 2) What’s the marketing budget? 3) Priority channels? 4) What’s the product’s differentiating value proposition?”

After you answer those questions, the AI produces a tailored, actionable strategy (appropriate channels, aligned messaging, realistic timeline, and focus on the differentiator) [1][2].

The quality leap from the first to the second answer is substantial, because the AI is no longer “guessing” the context.
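Numbering the questions also makes the exchange easy to handle programmatically. The sketch below assumes the model formats its questions as `1) ... 2) ...`, as in the example above. The function names and the sample answers are hypothetical, and the pattern is deliberately simple: it would mis-split a question that itself contains text like `3) `.

```python
import re


def extract_numbered_questions(reply: str) -> dict[int, str]:
    """Split a reply such as '1) ...? 2) ...?' into {number: question}."""
    # A number and ')' start a question; it runs until the next such marker
    # or the end of the reply.
    pattern = re.compile(r"(\d+)\)\s*(.*?)(?=\s+\d+\)\s|\s*$)", re.S)
    return {int(num): text.strip() for num, text in pattern.findall(reply)}


def format_answers(answers: dict[int, str]) -> str:
    """Render answers in question order, reusing the question numbers."""
    return "\n".join(f"{num}) {text}" for num, text in sorted(answers.items()))


reply = (
    "1) Who is the primary target audience? 2) What's the marketing budget? "
    "3) Priority channels? 4) What's the product's differentiating value "
    "proposition?"
)
questions = extract_numbered_questions(reply)

# Hypothetical answers, given out of order and matched up by number.
answers_text = format_answers({2: "USD 20,000", 1: "Small retailers"})
print(answers_text)
```

Matching answers to question numbers lets you skip or reorder answers without confusing the model about which question each one addresses.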

Results and Impact (Evidence Summarized)

The improvement isn’t just anecdotal:

Recent research shows that teaching models to anticipate and model future conversation turns to formulate clarifying questions significantly increases user preference for the resulting answers [3].

Other work proposes strategies like “Rephrase and Respond,” where the model reformulates/clarifies the query before replying, improving understanding and performance on ambiguous tasks [4].

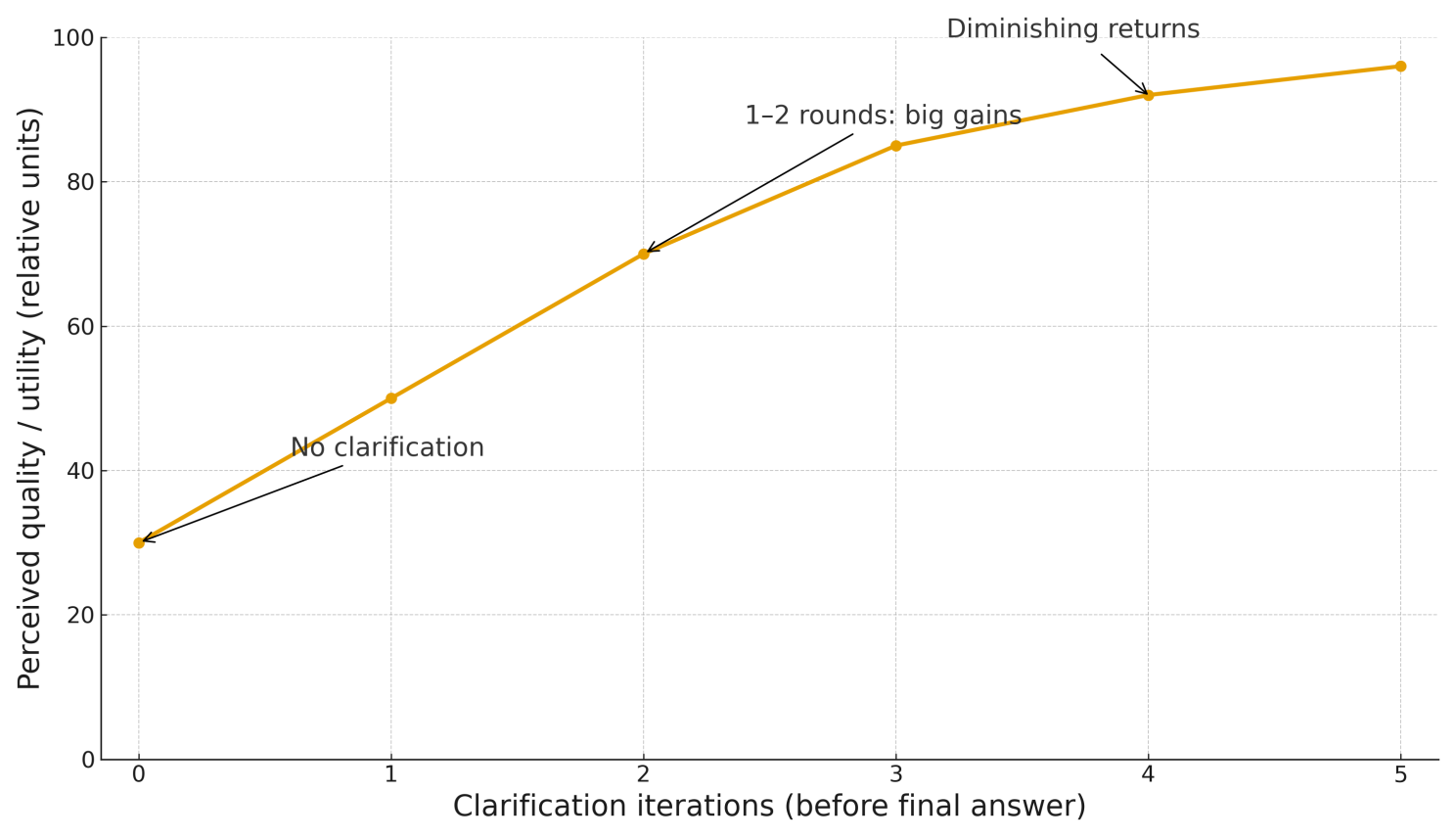

Figure 1: Conceptual representation (not measured data) of how the quality of AI responses improves as the number of clarification iterations increases. The horizontal axis shows the number of rounds of clarifying questions the model asks and the user answers before the final response; the vertical axis shows a scale of the quality of the generated information. With zero iterations (the AI responds directly without clarifying), quality is often low or generic. With each additional round of clarifications, quality rises, and the gains level off at a much higher and more refined level of response after a certain number of iterations.

How to Apply It Step by Step

1. Add the prompt snippet at the end of your request (see above).

2. Let the AI ask its questions, then answer each one clearly (if something doesn’t apply, say so).

3. Iterate if needed (one or two rounds usually suffice).

4. Ask for the final answer once everything is clarified.

5. Reuse the context in future interactions: when your earlier answers remain available to the model, it can take your preferences and typical requirements into account.
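The steps above can be sketched as a small loop. This is an illustration under stated assumptions, not a definitive implementation: `ask_model` stands in for whatever chat API you use, `get_user_answers` stands in for the human answering, `fake_model` is a stub so the example runs offline, and checking for a question mark is only a crude way to detect that the model is still asking.

```python
from typing import Callable

Message = dict[str, str]

SNIPPET = (
    "Before generating the requested information, please ask any clarifying "
    "questions you have. Number them so I can indicate which one I'm answering."
)
FINAL_REQUEST = "Everything is clarified. Please give the final answer now."


def clarify_then_answer(
    request: str,
    ask_model: Callable[[list[Message]], str],
    get_user_answers: Callable[[str], str],
    max_rounds: int = 2,
) -> str:
    """Run up to max_rounds of clarification, then return the final answer."""
    # Step 1: the request plus the snippet.
    history: list[Message] = [
        {"role": "user", "content": f"{request}\n\n{SNIPPET}"}
    ]
    for round_number in range(1, max_rounds + 1):
        reply = ask_model(history)
        history.append({"role": "assistant", "content": reply})
        if "?" not in reply:  # crude heuristic: no questions, so treat as final
            return reply
        # Steps 2 and 3: answer the questions; iterate if needed.
        answers = get_user_answers(reply)
        if round_number == max_rounds:
            # Step 4: on the last round, ask explicitly for the final answer.
            answers += "\n\n" + FINAL_REQUEST
        history.append({"role": "user", "content": answers})
    return ask_model(history)


def fake_model(history: list[Message]) -> str:
    """Stub model: asks questions once, then answers (hypothetical)."""
    if len(history) == 1:
        return "1) Who is the primary target audience? 2) What is the budget?"
    return "Final strategy: focus on the stated audience within the budget."


result = clarify_then_answer(
    "Prepare a marketing strategy for the launch of our new product.",
    ask_model=fake_model,
    get_user_answers=lambda questions: "1) Small retailers\n2) USD 20,000",
)
print(result)
```

Capping the loop at `max_rounds` reflects the observation that one or two rounds usually suffice, and the whole `history` is resent on every call so the model keeps the context from earlier rounds.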

Closing

Inviting the model to ask before answering turns AI into an expert collaborator: it reduces ambiguities, boosts precision, and speeds up decision-making with more relevant information. For leaders and teams, this practice translates into better outcomes with little extra effort: a small change in how we ask that unlocks higher-value solutions.

References

[1] Reece Rogers – “6 Practical Tips for Using Anthropic’s Claude Chatbot” – [https://www.wired.com/story/six-practical-tips-for-using-anthropic-claude-chatbot/]

[2] Lewis C. Lin – “The Secret Weapon for Better AI Responses: Ask Until You’re 95% Sure” – [https://www.lewis-lin.com/blog/the-secret-weapon-for-better-ai-responses-ask-until-youre-95-sure]

[3] Michael J. Q. Zhang, William B. Knox, Eunsol Choi – “Modeling Future Conversation Turns to Teach LLMs to Ask Clarifying Questions” – [https://openreview.net/forum?id=cwuSAR7EKd]

[4] Yihe Deng et al. – “Rephrase and Respond: Let Large Language Models Ask Better Questions for Themselves” – [https://arxiv.org/abs/2304.05128]